
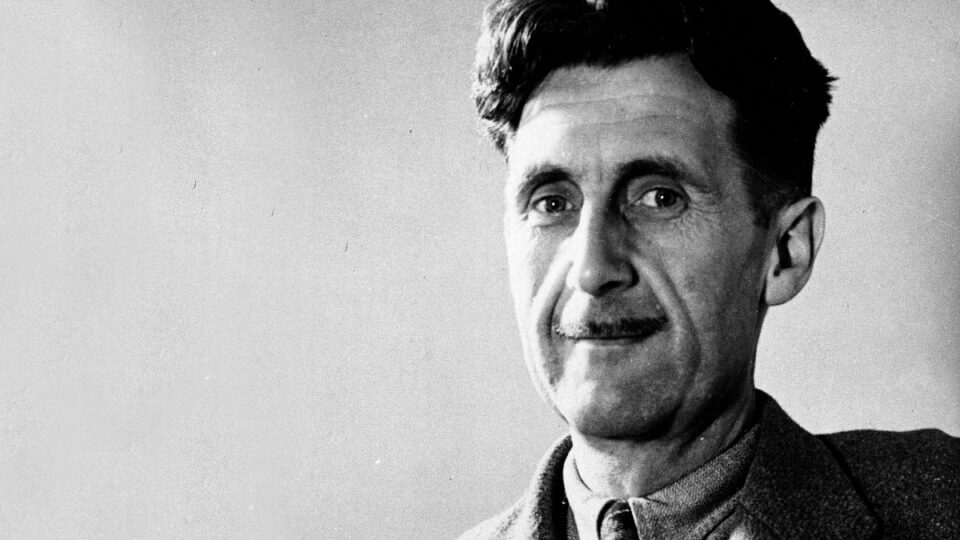

Jillian Jordan completed her Ph.D. in psychology at Yale University in 2018 and is currently a postdoctoral fellow at the Dispute Resolution Research Center at Northwestern University’s Kellogg School of Management. Her research primarily centers on human morality, with a particular focus on how people’s desire to appear moral to their peers affects their decision-making. Later this year, Jordan will begin work as an assistant professor at Harvard Business School. In this interview, she joins Merion West and Kambiz Tavana to discuss her research, particularly two papers, “Signaling When No One Is Watching” and “When Do We Punish People Who Don’t?,” as well as their broader implications.

Dr. Jordan, I want to ask you about two papers you were part of. The first is “Signaling When No One Is Watching,” and the second is “When Do We Punish People Who Don’t?” Can you start by walking me through “Signaling When No One Is Watching”?

For “Signaling When No One Is Watching,” our interest was motivated by previous research suggesting that people are strongly motivated to signal to others that they’re morally good. One way we try to do this is by punishing people who are bad, in order to convey to others: “I am not bad. I’m against wrongdoing, and you can trust me to behave morally.” So this work raises the question: Are we only trying to appear moral in contexts where people are watching us, out of an explicit desire to make them evaluate us positively? Or are people unconsciously trying to signal their moral virtue even in situations where nobody is actually observing them? In other words, do we have a default tendency to implicitly try to appear moral to others, one that doesn’t necessarily get turned off when we’re alone? What we wanted to do was provide evidence that when nobody is watching us and we find out about wrongdoing, one contributor to our moral outrage (and to our willingness to punish the wrongdoer) is actually the desire to appear moral in the eyes of hypothetical observers, even though nobody is actually watching. So that was our motivation.

Is this along the lines of what’s known as “The God Effect,” which says that people behave better when they know they’re being watched than when they are sure nobody is watching them?

That is certainly true. Our paper builds on the observation that people are more attuned to maintaining a good reputation, and are therefore more likely to behave morally, when they’re being watched than when they’re not. We were interested in what happens when people are not being watched: Is their desire to appear moral zero, or is it just lower than when they are being watched? We found that people still retained some motivation to look good in the eyes of others, even when nobody was watching.

Can you explain how you started going about this as a research project?

We know that if nobody is watching, and people are in an anonymous experiment, they’re probably not going to explicitly tell us, “Oh, I was motivated to appear moral in the eyes of others.” Because nobody is actually watching them, that’s probably not an explicit goal of theirs. So we asked: How can we infer that people are trying to appear moral, even when they aren’t directly aware of it?

So we built on a research design we had used previously, in which we give people the chance to engage in a direct moral behavior: sharing money with somebody else. We had found that people don’t think it’s as necessary to punish wrongdoers as a way to show that they’re good, because they’ve already established whether or not they’re good through their decision about whether to share. In that earlier work, where people were being observed and reputation was at stake, it went something like this: If I give you the chance to share money with someone else, you effectively say, “Okay, now I’ve established that I’m morally good; I don’t need to punish a wrongdoer to show that.” Having the chance to establish that they were morally good by sharing reduced people’s moralistic punishment and moral outrage. We wanted to ask: Does this effect persist even when nobody is watching? If it does, that seems to us an indication that even though people wouldn’t explicitly report that they’re trying to look good to others, they’re implicitly trying to do so. If we give them a really effective way to look good, they’re going to be less likely to use moralistic punishment as a way to look good, if that makes sense.

We used a lab experiment, in which people knew they were in an experiment, and we gave them a game to participate in that was based on people sharing (or not sharing) money with each other and punishing each other (or not). The lab experiment approach is just nice because we’re able to control it and set up exactly the conditions we want.

When you started this research, was there any hypothesis you initially had that turned out to be incorrect?

One interesting thing is that when we first started this research, we weren’t really thinking about individual differences, that is, whether some individuals were more likely than others to treat a situation in which nobody’s watching as if they were being watched. We were just thinking, “Oh, in general, people might act like they’re being watched even when they’re not.” And we ended up finding that there actually were individual differences. There are some individuals, whom we refer to in our paper as deliberative or reflective, who really differentiate between situations where reputation is at stake and situations where it’s not.

What was the biggest practical outcome of this research?

That’s a good question. One practical takeaway is that social scientists are increasingly realizing that one way to get people to behave in ways we think are good is by drawing on their desire for a good reputation. There’s a lot of research suggesting that if we tell people there’s a strong social norm to cooperate, or that they’re being observed by other people, they become more cooperative and more moral. So this is a tool we can use to encourage moral, pro-social, and cooperative behavior. Our results suggest that, for some individuals, these motivations to appear moral and to earn a good reputation persist even when they aren’t actually being watched. I think that suggests we should think about how to tap into people’s desire for a good reputation even when they’re not being monitored.

Another implication of the work concerns outrage culture. There’s a lot of concern these days that people are increasingly trying to signal their virtue to others by expressing outrage, and that this often doesn’t lead to constructive action. Maybe I go online and say that I’m really angry that Trump is putting children in cages, but that’s all I do. I don’t end up donating money to organizations helping immigrants or taking some other constructive, costly, meaningful action.

I think our results suggest that people are less motivated to simply express their anger, and more motivated to take constructive action, if they have the chance to do so, because they expect constructive action to be a more effective way to appear virtuous. So if we’re worried about non-constructive expressions of outrage, what we need to do is give people the chance to take substantive, constructive action in a context where they expect it to be a really good way to signal their virtue.

Are there any other important takeaways from the paper that we haven’t discussed?

One other aspect of the results that we’ve written about, and that we think is interesting, is that people typically think of genuine emotion and virtue signaling as mutually exclusive. In a lot of discussions of people’s desire to appear moral and signal their virtue, we treat it as an alternative to being generous: Either you’re generous or you’re trying to appear virtuous. What our results suggest is that because people are implicitly motivated to appear virtuous even when nobody’s watching (and when they’re not explicitly thinking about how to look good or about their reputation), virtue-signaling motives can actually contribute to the genuine moral outrage we feel. It’s absolutely possible for virtue signaling to contribute to our genuine emotion. If you ask, “Is she just virtue signaling?” or “Is she genuinely outraged?”, that might be the wrong question, because the answer might be both. Our genuine reactions are shaped by our unconscious virtue-signaling desires. While it’s certainly true that people sometimes engage in inauthentic virtue signaling (where they feel one way but act as if they feel another), there are also cases where people genuinely feel one way, and yet the reason they feel that way is that they’re implicitly trying to signal their virtue.

Can you talk a bit now about your second paper, “When Do We Punish People Who Don’t?”

In this paper, we’re interested in a phenomenon called second-order punishment. Second-order punishment works like this: You observe someone behave badly, and you don’t punish them. I think that was wrong and that you should have punished, so I’m going to punish you for not punishing. We were interested in whether second-order punishment is directed at failures to punish specifically in certain contexts. In particular, you could imagine two ways the scenario I described might play out. In one, somebody mistreats you, and you do nothing. I think that was inappropriate and you should have retaliated, so I’m going to punish you. In the other, someone mistreats somebody else, and you do nothing. I think that was inappropriate, and you should be punished. What we were interested in testing, and what we found in our research, is that the latter is much more common. If someone mistreats you and you do nothing, most people don’t tend to react by wanting to punish you. But if someone mistreats someone else and you do nothing, people are much more likely to want to punish you for not punishing.

I think the basic reason for this is that if someone harms you directly, your choice to punish them and retaliate is a selfish one, in the sense that it’s primarily going to protect you from being harmed in the future by showing that you’re not somebody to be messed with. It’s a self-focused thing. When people retaliate after being harmed directly, they’re angry that something bad happened to them, and they want to defend themselves, which is not something we seem to think you have a moral obligation to do. It’s more like, “You can do it if you want, but you don’t have to.” By contrast, if you see somebody mistreat somebody else, punishing is a pro-social, other-focused response, because you’re defending this other person even though you yourself haven’t been harmed. You’re revealing that you care about somebody else and are willing to defend them and try to prevent wrongdoing toward others. So we seem to feel there is more of an obligation to do that, and if you decline to do it, it’s more worthy of punishment.

We think there’s more of a normative obligation to punish when somebody else was mistreated than when you were mistreated. One caveat is that we don’t find a ton of second-order punishment in either scenario, but we find considerably more second-order punishment when somebody refuses to punish on behalf of others than when somebody refuses to punish on behalf of themselves.

What was the setting in which you conducted this experiment?

It was similar to the economic game in the other paper. We have a situation where somebody takes money from somebody else, so they behave in this “mean” way. In one version of the game, the person the money was taken from has the chance to retaliate by imposing a financial fine on the taker. In the other version, a third person, who was not taken from, has the chance to impose a fine on the taker. The person we’re really interested in is a fourth person, who witnesses this whole thing and decides whether to punish the person who was in charge of punishing the taker. What we find is that in the first version, where the person in charge of punishing the taker is the person who was taken from, participants are much less likely to punish that person for not punishing. In the version where a third person is in the role of the punisher, if that person doesn’t punish, they’re more likely to be punished by the participant in this second-order way.

One set of studies uses this economic game, but we also had other studies in our paper that use hypothetical scenarios. I would tell a story like, “This guy John was at a coffee shop, and somebody vandalized some property.” In one case, it was John’s property. In the other case, John was just a witness, and the property belonged to someone else. The question is then: If John does nothing, how bad do participants think that is, and how much condemnation do they think John deserves? We found that participants think John deserves more condemnation if the vandalism was directed at somebody else’s property than if it was directed at his own.

What’s the biggest takeaway from this research?

I think the takeaway is basically that we see moralistic punishment by third-party observers as normative. That’s not true of all punishment, and it’s not true of retaliation, but it is true of moralistic punishment. If people are interested in encouraging moralistic punishment, that’s something we can take advantage of to encourage bystanders who saw someone get mistreated to punish the transgressor. We can take advantage of the fact that it’s normative in a way similar to what I described earlier: We can try to make sure people feel their reputation is on the line and are aware that other people see it as normatively good to punish. If we want to reduce punishment, we could also try to take advantage of this. For encouraging this behavior, it’s a useful social lever, and our data suggest there’s no similar dynamic in the case of direct retaliation. People don’t feel there is a normative obligation to retaliate. Although you might think people retaliate too much when others mistreat them, our data suggest that having them act in private isn’t going to make them any less likely to do it. Generally, people aren’t retaliating in order to avoid condemnation from others. They’re probably doing it out of another, more intrinsic motivation.

If someone is a team leader, what is the most important finding from your research for running a team that is less judgmental and more cooperative?

One thing I will say is that “less judgmental” and “more cooperative” may not always be aligned. Judging people for not cooperating is sometimes an important way we maintain a norm of cooperation. The ideal in a team may be that you create a norm that you’re supposed to cooperate as a team, and that if you don’t, people are going to judge you, and you’re aware of that. The broader framework I would use for thinking about that situation is that, as a team leader, you might have the power to shape the norms and to think concretely about which types of punishment and behavior you consider constructive, and which types are bad and maybe too judgmental. What our data suggest is that people have a psychology in which they can be open to the idea that it’s normative to punish in particular ways, and might even be willing to punish people for failing to punish. That is a psychology people bring to the table, and you could try to work with it to advance your goals. It’s about encouraging a constructive level of punishment to create a team with strong norms for good behavior.

Jordan’s claim that it’s absolutely possible for virtue signaling to contribute to our genuine emotion or moral decision-making is interesting. It makes sense that we want to do the right thing, and that part of that decision is letting the world know how virtuous we are (whether we realize it or not). Those who know me best are aware that I dedicated much of my life to raising my sister’s child, a child she was unwilling and unable to care for. For me, there is always a problem when trying to discuss any theory of morality: what makes an action right, or what makes a person or thing good. Early on in the care of my niece, I was accused by some of deciding to care for her partly to bolster my social standing (the term “virtue signaling” was not around in 1993). I always disagreed; my view was that my actions regarding what was moral or ethical never came from a Kantian “what should I do” perspective, but rather from an Aristotelian “what should I be” virtue-ethics standpoint. I still stand behind what I thought 26 years ago. My conduct emanates from my personal moral values, not from duty. Hypocrisy drives me absolutely bonkers, and I have learned that why it bothers some people is one of the questions Jordan researches. At school, I gravitated to the ideas of Émile Durkheim and his theory of social facts, which holds that social facts are values, cultural norms, and social structures that transcend the individual and can exercise social control. I would guess that Jordan is familiar with Durkheim’s ideas as well. The problem is that, when you get down to it, it is never possible to measure or understand how all of the social facts in society interact with each other. Good work, Kambiz.

Still trying to figure out which Jordan I prefer: Jordan Peterson or Jillian Jordan. Or maybe both!